Role

UX/UI designer

Target

B2C/B2B

Year

2019

Duration

6 months

Skills

Sketch

Post-it

Apeer empowers scientists, researchers, and students in biomedical and life sciences to analyze microscopy images without coding. Through AI-powered, cloud-based tools, users can label data, train models, and extract insights in days instead of weeks. The platform combines an AI-assisted annotation tool with a 2D segmentation workflow, automating image analysis and accelerating research with intuitive, no-code precision.

Introduce a complex machine learning workflow (annotate, train, and segment) to non-technical users. The challenge had two parts: first, accelerate dataset creation with an AI-assisted auto-annotation tool; second, guide users through an end-to-end 2D segmentation workflow so they could train a model and apply it to new images for real insights. The goal was explicit: reduce the journey from raw images to usable segmentation results from weeks to days, and make AI feel less like a black box and more like a powerful, accessible tool.

My Role & Responsibilities

I was the sole designer on this MVP, responsible for the full UX/UI process—from research and concept ideation to high-fidelity prototypes and developer handoff.

I collaborated closely with product managers and ML engineers to ensure feasibility and alignment with Apeer’s existing infrastructure.

My contribution focused on two critical experiences:

Designing the entire annotation, training, and segmentation user experience flow.

Designing an AI-assisted annotation tool that learns from a few user-labelled examples.

To deeply understand the user experience around machine learning in microscopy, I conducted interviews with 12 participants across different levels of technical expertise. After each session, I mapped out the insights on a shared board using post-its, organizing observations around key moments in the workflow. This visual mapping allowed patterns and recurring pain points to emerge quickly.

Through this process, I identified a clear sequence of core steps users needed to go through: annotating datasets, training the model, receiving augmented data, processing 2D segmentations, and finally extracting insights. Each of these steps came with its own set of mental models, expectations, and areas of uncertainty.

To make sense of the complexity, I created a synthesis of the interviews that distilled user goals, frustrations, and informational gaps. This synthesis helped me uncover what users needed to know at each step and where they struggled most—especially with confidence, understanding outcomes, and knowing what would happen next.

I then built a detailed task analysis of the entire machine learning process, breaking it down step by step from the user's point of view. This task analysis became a foundational reference that guided design decisions throughout the project. It helped me align the interface with users’ mental models and ensure that each part of the experience felt purposeful, clear, and supportive—even within the tight constraints of our MVP.

I synthesized all the interviews into an ideal workflow representation, which served as a blueprint for the entire experience. I broke the workflow down into distinct tasks to ensure clarity and efficiency at each step. This task analysis distills the ML workflow into three actionable jobs: Annotate, Train, and Process (for Segmentation). For each phase, I identified core tasks, cognitive load, and pain points, then streamlined micro-interactions to reduce repetition and decision fatigue. The result: non-technical users can label a small image set, fine-tune parameters with guided defaults, and batch-process thousands of images, all while feeling confident and in control.

This feature was the first major innovation. Users begin by manually labelling a few sample regions—cell membranes, nuclei, organelles—and the AI immediately starts predicting similar regions across the image.

This auto-annotation tool not only speeds up dataset creation but also improves model accuracy by capturing more consistent labelling patterns.
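To make the idea concrete, here is a minimal sketch of how a few user-labelled pixels could be propagated across an image. It is not Apeer's actual model: it assumes a grayscale image and a toy nearest-centroid rule on pixel intensity, and the names `propagate_labels`, `image`, and `seeds` are hypothetical, chosen only for illustration.

```python
from statistics import mean


def propagate_labels(image, seeds):
    """Assign every pixel the label whose seed intensities it most resembles.

    image: 2D list of grayscale intensities (toy stand-in for a microscopy image).
    seeds: dict mapping (row, col) -> label for the few pixels the user labelled.
    """
    # Collect the intensities of the user-labelled pixels, grouped by label.
    by_label = {}
    for (row, col), label in seeds.items():
        by_label.setdefault(label, []).append(image[row][col])

    # One centroid (mean intensity) per label.
    centroids = {label: mean(values) for label, values in by_label.items()}

    # Predict each pixel as the label with the nearest centroid.
    return [
        [min(centroids, key=lambda lab: abs(px - centroids[lab])) for px in row]
        for row in image
    ]


# Hypothetical 3x4 image: dark background, bright nuclei.
image = [
    [10, 12, 200, 210],
    [11, 13, 205, 198],
    [9, 190, 202, 14],
]
# The user labels just two pixels.
seeds = {(0, 0): "background", (0, 2): "nucleus"}

predicted = propagate_labels(image, seeds)
for row in predicted:
    print(row)
```

A production tool would rely on far richer features than raw intensity, but the interaction pattern is the same: a handful of manual labels seeds predictions for the rest of the image, which the user then reviews and corrects.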

Annotate

In the initial phase of the machine learning workflow, I focused on creating a user-friendly annotation interface that simplifies the process of labeling images for AI training. Understanding that accurate annotations are critical for model performance, I designed tools that allow users to effortlessly draw bounding boxes and apply labels. To enhance efficiency, I incorporated features such as keyboard shortcuts and zoom functionalities, enabling users to navigate and annotate large datasets with ease. The interface was tested with end-users to ensure that it met their needs and allowed for quick, precise annotations, laying a solid foundation for the subsequent training phase.

A full demonstration of the annotation tool can be seen here.

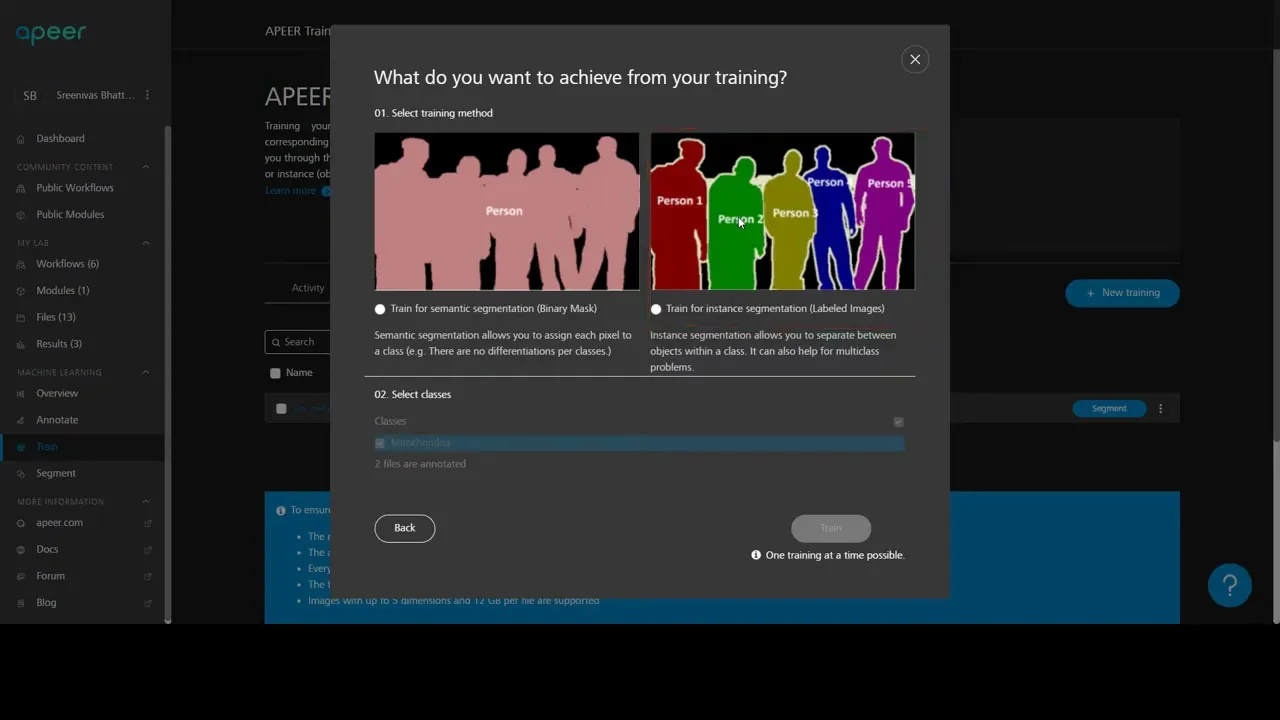

Train

Transitioning to the training phase, I aimed to demystify the model training process for users by providing clear, actionable feedback. The interface displays real-time updates on training progress, including metrics like accuracy and loss, presented through intuitive visualizations. Users can easily adjust parameters and initiate retraining, fostering an interactive environment that encourages experimentation and learning. By simplifying complex processes into comprehensible steps, the design empowers users to engage confidently with model training, regardless of their technical background.

Before hitting “Train,” users often spent 10–15 minutes labeling just a few examples. Thanks to our AI-assisted annotation feature, that time produced far more annotated data than traditional tools — allowing users to go from raw images to trained model in a single session.

Sneak peek of training phase

Apeer tutorial for training phase

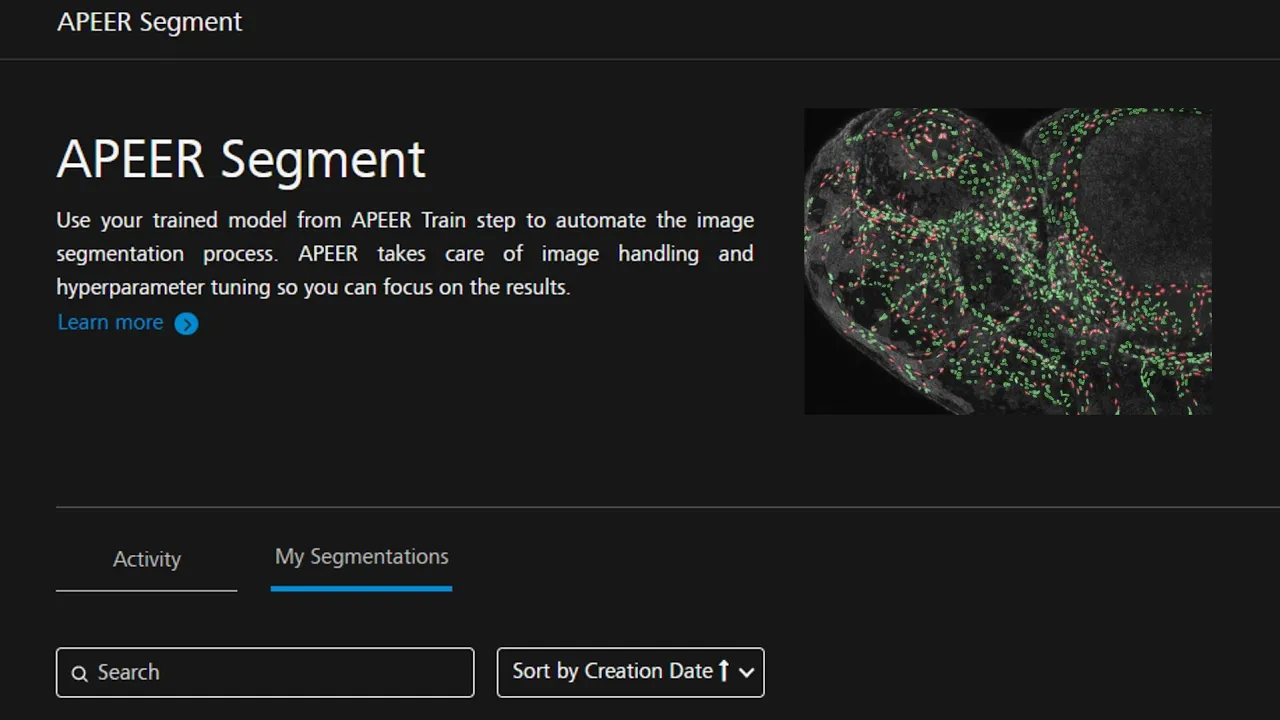

Segment

In the final segmentation phase, the focus was on enabling users to apply trained models to new data effectively. The interface allows users to upload images and view segmentation results, with options to fine-tune outputs as needed. I integrated features that let users compare original images with segmented outputs side by side, facilitating a deeper understanding of model performance. This design ensures that users can validate and refine segmentation results, closing the loop in the machine learning workflow and promoting continuous improvement.

Apeer tutorial for segmentation phase

Validation & Testing

We conducted usability tests with six researchers and students.

Results showed that the combination of AI-assisted annotation and guided training improved efficiency and trust significantly.

Quantitative improvements:

Time to generate a labeled dataset reduced from weeks to days

70% of participants successfully trained and applied a model

80% described the process as “intuitive” or “surprisingly easy”